Abstract

Federated Learning (FL) has emerged as a pivotal technique in decentralized artificial intelligence, enabling collaborative model training across distributed devices while keeping raw data on those devices. This report explains the technical mechanics of FL, surveys its main algorithms (FedSGD, FedAvg, and FedProx), examines the challenges inherent in its implementation, and highlights real-world applications across healthcare, finance, autonomous vehicles, and the Internet of Things. It aims to give a clear picture of FL’s capabilities, limitations, and future prospects.

Many thanks to our sponsor Panxora who helped us prepare this research report.

1. Introduction

The proliferation of data and the increasing emphasis on privacy have necessitated the development of machine learning paradigms that do not require centralized data collection. Federated Learning addresses this need by allowing multiple entities to collaboratively train models without sharing their raw data. This decentralized approach not only enhances data sovereignty but also mitigates privacy concerns associated with traditional centralized machine learning methods. (en.wikipedia.org)


2. Technical Mechanics of Federated Learning

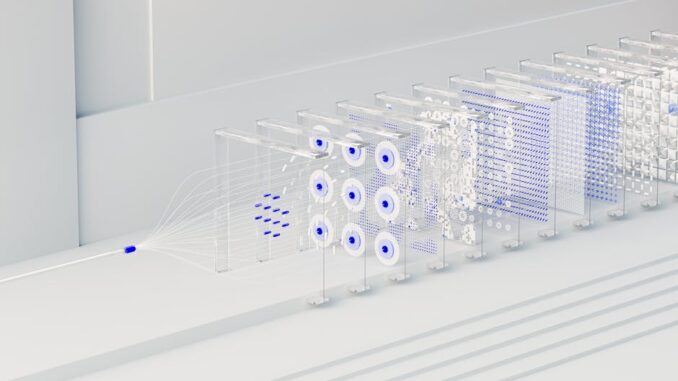

Federated Learning operates through a series of iterative processes involving local training and global aggregation. The general workflow is as follows:

- Initialization: A global model is initialized and distributed to participating clients.

- Local Training: Each client trains the model on its local dataset, updating the model parameters based on local data.

- Aggregation: The updated model parameters from the participating clients are aggregated, typically by a weighted average (for example, weighted by each client's number of training samples), to form a new global model.

- Iteration: The updated global model is redistributed to clients, and the process repeats until convergence. (en.wikipedia.org)

This iterative process ensures that the global model benefits from the diverse data distributed across clients without the need for data centralization.
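The four steps above can be sketched in plain Python. This is a toy illustration under stated assumptions, not a production design: the "model" is just a list of floats, local training nudges the model toward the mean of the client's points, and the names `local_train`, `aggregate`, and `run_federated` are hypothetical helpers introduced here.

```python
from typing import List

Model = List[float]
Dataset = List[List[float]]


def local_train(model: Model, data: Dataset, lr: float = 0.5) -> Model:
    """Hypothetical local step: move the model toward the mean of the client's points."""
    dim = len(model)
    mean = [sum(point[i] for point in data) / len(data) for i in range(dim)]
    return [w + lr * (m - w) for w, m in zip(model, mean)]


def aggregate(models: List[Model]) -> Model:
    """Unweighted coordinate-wise average of the client models."""
    n = len(models)
    return [sum(m[i] for m in models) / n for i in range(len(models[0]))]


def run_federated(clients: List[Dataset], dim: int,
                  rounds: int = 50, tol: float = 1e-9) -> Model:
    global_model = [0.0] * dim                      # 1. initialization
    for _ in range(rounds):
        # 2. local training: each client starts from a copy of the global model
        local_models = [local_train(list(global_model), data) for data in clients]
        # 3. aggregation: only model parameters reach the server, never raw data
        new_global = aggregate(local_models)
        change = max(abs(a - b) for a, b in zip(new_global, global_model))
        global_model = new_global                   # 4. redistribute and repeat
        if change < tol:                            # simple convergence check
            break
    return global_model


# Three clients with different local data (illustrative values).
clients = [
    [[1.0, 2.0], [3.0, 4.0]],   # local mean (2, 3)
    [[5.0, 6.0]],               # local mean (5, 6)
    [[0.0, 0.0], [2.0, 2.0]],   # local mean (1, 1)
]
final_model = run_federated(clients, dim=2)
```

Because this sketch averages client models without weighting, the result settles at the average of the client means rather than the mean of all pooled points. Weighting by dataset size, as many FL systems do, would change that.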


3. Federated Learning Algorithms

Several algorithms have been developed to optimize the performance of Federated Learning:

3.1 Federated Stochastic Gradient Descent (FedSGD)

FedSGD adapts the traditional stochastic gradient descent algorithm to the federated setting. Each client computes the gradient of the loss function on its local data and sends that gradient to a central server. The server averages the gradients and applies a single update to the global model. The method is straightforward but communication-intensive, because every gradient step requires a round trip between the clients and the server. (en.wikipedia.org)
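A minimal sketch of one way FedSGD could look, assuming a one-dimensional linear model y ≈ w·x + b with a mean-squared-error loss. The helper names `local_gradient` and `fedsgd_round`, the toy data, and the choice to weight each client's gradient by its sample count are assumptions made for illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_gradient(w: float, b: float, data: List[Sample]) -> Tuple[float, float]:
    """Gradient of the mean squared error for y ≈ w*x + b on one client's data."""
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b


def fedsgd_round(w: float, b: float, clients: List[List[Sample]],
                 lr: float) -> Tuple[float, float]:
    """One communication round: clients send gradients, the server takes one step."""
    total = sum(len(data) for data in clients)
    avg_w = avg_b = 0.0
    for data in clients:
        grad_w, grad_b = local_gradient(w, b, data)  # computed on the client
        weight = len(data) / total                   # assumption: weight by sample count
        avg_w += weight * grad_w
        avg_b += weight * grad_b
    return w - lr * avg_w, b - lr * avg_b            # single server-side update


# Toy data drawn from y = 2x + 1, split across two clients.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
w, b = 0.0, 0.0
for _ in range(2000):  # every step costs one round of communication
    w, b = fedsgd_round(w, b, clients, lr=0.05)
```

With sample-count weighting, the averaged gradient equals the gradient over the pooled data, so this sketch behaves like ordinary full-batch gradient descent while the data stays on the clients.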

3.2 Federated Averaging (FedAvg)

FedAvg improves upon FedSGD by allowing clients to perform multiple local updates before communicating with the server. Each client trains the model on its local data for several epochs and then sends the updated model parameters to the server. The server averages the received parameters, typically weighted by each client's dataset size, to update the global model. This approach reduces communication overhead, although when clients have heterogeneous data distributions the additional local steps can pull client models in different directions and slow convergence. (en.wikipedia.org)
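The difference from FedSGD can be illustrated with a short sketch, again assuming a toy linear model y ≈ w·x + b. Here clients run several local epochs and return parameters rather than gradients. The names `local_update` and `fedavg_round`, the data, and the hyperparameters are illustrative assumptions.

```python
from typing import List, Tuple

Sample = Tuple[float, float]   # (x, y)
Params = Tuple[float, float]   # (w, b)


def local_update(params: Params, data: List[Sample],
                 epochs: int, lr: float) -> Params:
    """Run several epochs of per-sample SGD on the client, starting from the global model."""
    w, b = params
    for _ in range(epochs):
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b


def fedavg_round(params: Params, clients: List[List[Sample]],
                 epochs: int, lr: float) -> Params:
    """One round: clients train locally, the server averages the returned parameters."""
    total = sum(len(data) for data in clients)
    new_w = new_b = 0.0
    for data in clients:
        w, b = local_update(params, data, epochs, lr)
        share = len(data) / total        # weight by local dataset size
        new_w += share * w
        new_b += share * b
    return new_w, new_b


# Toy data drawn from y = 2x + 1, split across two clients.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
params: Params = (0.0, 0.0)
for _ in range(500):   # each round carries 5 local epochs of work per client
    params = fedavg_round(params, clients, epochs=5, lr=0.02)
```

In this toy setup both clients' data come from the same line, so local training never pulls the models toward conflicting optima. With genuinely non-IID data, the same loop can show the drift described in the challenges section.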

3.3 Federated Proximal (FedProx)

FedProx introduces a proximal term to the local objective function to address the challenges posed by heterogeneous data distributions. This term penalizes deviations from the global model, encouraging local models to remain close to the global model, thereby improving convergence in non-IID settings. (tomorrowdesk.com)
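As a hedged sketch, the proximal term is commonly written as (μ/2)·‖w − w_global‖² added to the client's local loss, which contributes μ·(w − w_global) to the gradient. The function `fedprox_local_update` and the quadratic toy loss below are illustrative assumptions, not a reference implementation.

```python
from typing import Callable, List

Vector = List[float]


def fedprox_local_update(w_global: Vector,
                         local_grad: Callable[[Vector], Vector],
                         mu: float, lr: float, steps: int) -> Vector:
    """Gradient descent on local_loss(w) + (mu / 2) * ||w - w_global||^2."""
    w = list(w_global)
    for _ in range(steps):
        grad = local_grad(w)
        # The proximal term adds mu * (w - w_global) to the local gradient.
        w = [wi - lr * (gi + mu * (wi - gwi))
             for wi, gi, gwi in zip(w, grad, w_global)]
    return w


# Toy client whose local loss (w - 4)^2 is minimized far from the global model at 0.
def client_grad(w: Vector) -> Vector:
    return [2 * (w[0] - 4.0)]


w_global = [0.0]
w_plain = fedprox_local_update(w_global, client_grad, mu=0.0, lr=0.1, steps=200)
w_prox = fedprox_local_update(w_global, client_grad, mu=2.0, lr=0.1, steps=200)
```

With `mu=0.0` the update reduces to plain local gradient descent and the client moves all the way to its own optimum. With `mu=2.0` the local solution stays between the global model and the client optimum, which is the pull toward the global model that the proximal term is meant to provide.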


4. Challenges in Federated Learning

Despite its advantages, Federated Learning faces several challenges:

4.1 Data Heterogeneity

Clients often hold data that is not independent and identically distributed (non-IID): each client's dataset reflects its own users, sensors, or environment rather than the overall population. This can bias the global model and slow convergence. Addressing it requires algorithms that tolerate data variability while still delivering robust model performance. (en.wikipedia.org)

4.2 Communication Overhead

The need for frequent communication between clients and the central server can lead to significant bandwidth consumption and latency, especially in large-scale deployments. Techniques such as model compression, gradient quantization, and asynchronous updates are being explored to mitigate this issue. (tech-shizzle.com)
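One of the techniques mentioned above, quantization, can be sketched as follows. This is a simple uniform 8-bit scheme chosen for illustration. The helper names `quantize` and `dequantize` are hypothetical, and practical systems often use more elaborate (for example, stochastic or per-layer) schemes.

```python
from typing import List, Tuple


def quantize(update: List[float], bits: int = 8) -> Tuple[List[int], float, float]:
    """Map each float to an integer code in [0, 2**bits - 1] using a uniform grid."""
    lo, hi = min(update), max(update)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0   # avoid division by zero
    codes = [round((v - lo) / scale) for v in update]
    return codes, lo, scale


def dequantize(codes: List[int], lo: float, scale: float) -> List[float]:
    """Reconstruct approximate floats on the receiving side."""
    return [lo + c * scale for c in codes]


# Illustrative model update; a client would send codes, lo, and scale.
update = [0.12, -0.5, 0.33, 0.0, 0.91, -0.07]
codes, lo, scale = quantize(update, bits=8)
restored = dequantize(codes, lo, scale)
max_error = max(abs(a - b) for a, b in zip(update, restored))
```

Each value is sent as one byte instead of a 32-bit or 64-bit float, at the cost of a rounding error of at most half a grid step (`scale / 2`) per coordinate.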

4.3 Security Vulnerabilities

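Federated Learning systems are susceptible to various security threats, including model poisoning, in which malicious clients submit manipulated updates, and inference attacks, in which an adversary tries to recover information about training data from shared updates. Secure aggregation protocols and differential privacy limit what can be learned from any individual update, and such measures are essential to safeguard the learning process. (schneppat.com)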
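The idea behind secure aggregation can be illustrated with pairwise additive masking: each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the server's sum. The sketch below is heavily simplified and rests on stated assumptions: updates are already encoded as integers, a single seeded generator stands in for pairwise key agreement, and client dropouts are ignored. The names `mask_updates` and `server_sum` are hypothetical.

```python
import random
from typing import List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def mask_updates(updates: List[List[int]], seed: int = 0) -> List[List[int]]:
    """Return masked copies of the client updates; pairwise masks cancel in the sum."""
    rng = random.Random(seed)  # assumption: stands in for pairwise shared secrets
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            pair_mask = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masked[i][k] = (masked[i][k] + pair_mask[k]) % MODULUS  # client i adds
                masked[j][k] = (masked[j][k] - pair_mask[k]) % MODULUS  # client j subtracts
    return masked


def server_sum(masked: List[List[int]]) -> List[int]:
    """The server only sees masked vectors, yet their sum equals the true sum."""
    dim = len(masked[0])
    return [sum(m[k] for m in masked) % MODULUS for k in range(dim)]


# Illustrative integer-encoded updates from three clients.
updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masked = mask_updates(updates)
total = server_sum(masked)
```

The server recovers the aggregate without seeing any individual update in the clear. This protects confidentiality only. It does not by itself stop a malicious client from submitting a poisoned update.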

4.4 Device Heterogeneity

The variability in computational capabilities, storage, and energy resources among client devices can impact the efficiency and effectiveness of Federated Learning. Developing adaptive algorithms that can accommodate this heterogeneity is crucial for the widespread adoption of FL. (tech-shizzle.com)


5. Real-World Applications of Federated Learning

Federated Learning has been successfully applied across various industries:

5.1 Healthcare

In healthcare, FL enables hospitals and research institutions to develop predictive models for disease diagnosis and treatment without sharing sensitive patient data. Because patient records stay within each institution, this approach enhances data privacy and can help organizations meet regulations such as HIPAA. (numberanalytics.com)

5.2 Finance

The financial sector utilizes FL for tasks like credit risk assessment and fraud detection, allowing institutions to collaborate on model training while keeping customer data confidential. (numberanalytics.com)

5.3 Autonomous Vehicles

FL facilitates the training of models for self-driving cars by aggregating model updates, rather than raw driving data, from numerous vehicles, leading to improved navigation and safety features without compromising user privacy. (mdpi.com)

5.4 Internet of Things (IoT)

In IoT applications, FL enables devices to collaboratively learn from data generated by sensors and other connected devices, leading to more efficient and intelligent systems. (numberanalytics.com)


6. Future Directions

The future of Federated Learning involves addressing current challenges and exploring new applications:

- Personalized Federated Learning: Developing models that can adapt to the specific needs of individual clients while maintaining privacy.

- Federated Reinforcement Learning: Integrating FL with reinforcement learning to enable collaborative decision-making in dynamic environments. (arxiv.org)

- Edge Computing Integration: Leveraging FL in edge computing scenarios to process data closer to the source, reducing latency and bandwidth usage. (arxiv.org)


7. Conclusion

Federated Learning represents a significant advancement in decentralized AI, offering a framework that balances collaborative model training with data privacy. While challenges such as data heterogeneity, communication overhead, and security vulnerabilities persist, ongoing research and technological advancements continue to enhance the viability and applicability of FL across various domains.

